
Visual Object Classes Challenge 2008 (VOC2008)


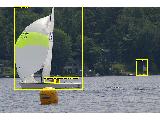

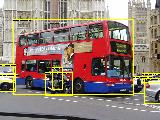

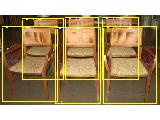

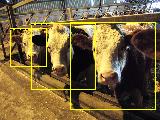

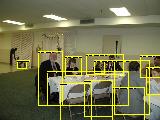

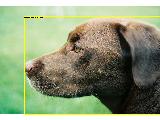

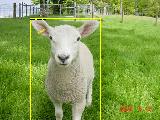

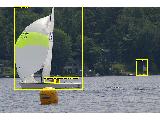

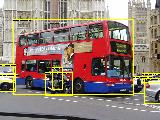

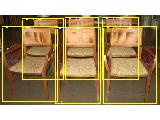

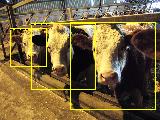

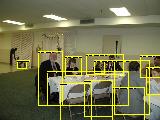

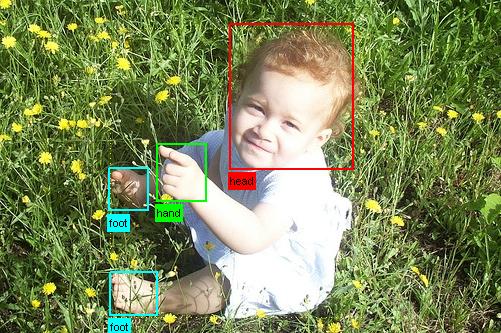


The goal of this challenge is to recognize objects from a number of visual object classes in realistic scenes (i.e. not pre-segmented objects). It is fundamentally a supervised learning problem in that a training set of labelled images is provided. The twenty object classes that have been selected are:

There will be two main competitions and two smaller-scale "taster" competitions:


Participants may enter either (or both) of these competitions, and can choose to tackle any (or all) of the twenty object classes. The challenge allows for two approaches to each of the competitions:

The intention in the first case is to establish just what level of success can currently be achieved on these problems and by what method; in the second case the intention is to establish which method is most successful given a specified training set.

[Example images: segmentation taster (Image, Objects, Class) and person layout taster (Image, Person Layout).]

Participants may enter either (or both) of these competitions.

To download the training/validation data, see the development kit. In total there are 10,057 images (further statistics are available online).

The training data provided consists of a set of images; each image has an annotation file giving a bounding box and object class label for each object in one of the twenty classes present in the image. Note that multiple objects from multiple classes may be present in the same image. Some example images can be viewed online.
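The development kit provides MATLAB code for reading these annotation files. As an unofficial sketch in Python, the snippet below reads one annotation into (class label, bounding box) pairs, showing how a single image can hold several objects from different classes. It assumes the PASCAL-style XML layout (`object`, `name`, and `bndbox` with `xmin`/`ymin`/`xmax`/`ymax` elements); the sample annotation and the `parse_annotation` helper are hypothetical, so check the element names against the development kit documentation.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Hypothetical annotation in the assumed PASCAL-style XML layout:
# one image containing two objects from two different classes.
SAMPLE_XML = """<annotation>
  <filename>example.jpg</filename>
  <object>
    <name>dog</name>
    <bndbox><xmin>48</xmin><ymin>240</ymin><xmax>195</xmax><ymax>371</ymax></bndbox>
  </object>
  <object>
    <name>person</name>
    <bndbox><xmin>8</xmin><ymin>12</ymin><xmax>352</xmax><ymax>498</ymax></bndbox>
  </object>
</annotation>"""

Box = Tuple[int, int, int, int]  # (xmin, ymin, xmax, ymax) in pixels


def parse_annotation(xml_text: str) -> List[Tuple[str, Box]]:
    """Return one (class label, bounding box) pair per annotated object."""
    root = ET.fromstring(xml_text)
    objects = []
    for obj in root.findall("object"):
        label = obj.findtext("name").strip()
        bb = obj.find("bndbox")
        # Go via float in case a coordinate is written with a decimal point.
        box = tuple(int(float(bb.findtext(k))) for k in ("xmin", "ymin", "xmax", "ymax"))
        objects.append((label, box))
    return objects


if __name__ == "__main__":
    for label, box in parse_annotation(SAMPLE_XML):
        print(label, box)
```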

Annotation was performed according to a set of guidelines distributed to all annotators.

The data will be made available in two stages; in the first stage, a development kit will be released consisting of training and validation data, plus evaluation software (written in MATLAB). One purpose of the validation set is to demonstrate how the evaluation software works ahead of the competition submission.

In the second stage, the test set will be made available for the actual competition. As in the VOC2007 challenge, no ground truth for the test data will be released until after the challenge is complete.

The data has been split into 50% for training/validation and 50% for testing. The distributions of images and objects by class are approximately equal across the training/validation and test sets. In total there are 10,057 images. Further statistics are available online; statistics for the test data will be released after the challenge.

Example images and the corresponding annotation for the main classification/detection tasks, segmentation and layout tasters can be viewed online:

The development kit consists of the training/validation data, MATLAB code for reading the annotation data, support files, and example implementations for each competition.

There were errors in the 14-Apr-2008 release of the training/validation data as follows:

The errors will not affect evaluation, but participants wanting to take advantage of the "don't care" label (without having to compute it themselves) should download the patch, which contains updated image lists, and can be untarred over the original development kit:

The test data for the VOC2008 challenge is now available. Note that the only annotation in the test data is for the layout taster challenge, which is disjoint from the main challenge. Full annotation will be released after the workshop at ECCV.

The test data can now be downloaded from the evaluation server. You can also use the evaluation server to evaluate your method on the test data.

Participants are expected to submit a single set of results per method employed. Participants who have investigated several algorithms may submit one result per method. Changes in algorithm parameters do not constitute a different method: all parameter tuning must be conducted using the training and validation data alone.

Details of the required file formats for submitted results can be found in the development kit documentation.
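The development kit documentation is the authoritative specification. Purely as an illustration, the Python sketch below writes the two kinds of output this page mentions: one confidence per image for classification, and one line per bounding box for detection. The whitespace-separated field order, the number formatting, the image identifiers, and the `write_classification`/`write_detection` helpers are all assumptions, not the official format.

```python
import io
from typing import Iterable, TextIO, Tuple


def write_classification(out: TextIO, scores: Iterable[Tuple[str, float]]) -> None:
    """Assumed layout: one line per test image, '<image id> <confidence>'."""
    for image_id, confidence in scores:
        out.write(f"{image_id} {confidence:.6f}\n")


def write_detection(out: TextIO,
                    dets: Iterable[Tuple[str, float, float, float, float, float]]) -> None:
    """Assumed layout: one line per box, '<image id> <confidence> <xmin> <ymin> <xmax> <ymax>'."""
    for image_id, confidence, xmin, ymin, xmax, ymax in dets:
        out.write(f"{image_id} {confidence:.6f} {xmin:.1f} {ymin:.1f} {xmax:.1f} {ymax:.1f}\n")


if __name__ == "__main__":
    # Hypothetical image identifiers and scores, for illustration only.
    buf = io.StringIO()
    write_classification(buf, [("img_0001", 0.93), ("img_0002", -0.41)])
    write_detection(buf, [("img_0001", 0.75, 10, 20, 110, 220)])
    print(buf.getvalue(), end="")
```

Writing to an in-memory buffer keeps the sketch easy to test; in practice `out` would be a file opened for writing in the results directory.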

The results files should be collected in a single archive file (tar/zip) and placed on an FTP/HTTP server accessible from outside your institution. Email the URL and any details needed to access the file to Mark Everingham, me@comp.leeds.ac.uk. Please do not send large files (>1MB) directly by email.

Participants submitting results for several different methods (noting the definition of different methods above) may either collect results for each method into a single archive, providing separate directories for each method and an appropriate key to the results, or may submit several archive files.
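For the single-archive option, the sketch below uses Python's standard `tarfile` module to pack each method's results into its own top-level directory and add a short key file at the archive root. The directory names, the `KEY.txt` file and its one-line-per-method contents, and the `build_submission` helper are hypothetical; only the one-directory-per-method layout with a key comes from the instructions above.

```python
import tarfile
import tempfile
from pathlib import Path
from typing import Dict


def build_submission(archive: Path, methods: Dict[str, Path], key: Dict[str, str]) -> None:
    """Pack each method's results directory under its own name, plus a key file.

    methods: method name -> local directory holding that method's results files
    key:     method name -> short description written to KEY.txt (hypothetical format)
    """
    with tarfile.open(archive, "w:gz") as tar:
        for name, results_dir in methods.items():
            # arcname stores the directory (recursively) under "<name>/" in the archive.
            tar.add(results_dir, arcname=name)
        # Write the key to a temporary file, then add it at the archive root.
        with tempfile.TemporaryDirectory() as tmp:
            key_path = Path(tmp) / "KEY.txt"
            key_path.write_text("".join(f"{n}: {d}\n" for n, d in key.items()))
            tar.add(key_path, arcname="KEY.txt")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as work:
        root = Path(work)
        for m in ("methodA", "methodB"):
            (root / m).mkdir()
            (root / m / "results.txt").write_text("placeholder\n")
        out = root / "submission.tar.gz"
        build_submission(out, {m: root / m for m in ("methodA", "methodB")},
                         {"methodA": "baseline", "methodB": "variant"})
        with tarfile.open(out) as tar:
            print(sorted(tar.getnames()))
```

A zip archive built with the standard `zipfile` module would serve equally well, since the page accepts either tar or zip.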

In addition to the results files, participants should provide contact details, a list of contributors and a brief description of the method used (see below). This information may be sent by email or included in the results archive file.

For participants using the provided development kit, all results are stored in the results/ directory. An archive suitable for submission can be generated using e.g.:

Participants not making use of the development kit must follow the specification for contents and naming of results files given in the development kit. Example files in the correct format may be generated by running the example implementations in the development kit.

If at all possible, participants are requested to submit results for both the VOC2008 and VOC2007 test sets provided in the test data, to allow comparison of results across the years. In both cases, the VOC2008 training/validation data should be used for training i.e.

The updated development kit provides a switch to select between test sets. Results are placed in two directories, results/VOC2007/ or results/VOC2008/ according to the test set.

The main mechanism for dissemination of the results will be the challenge webpage.

For VOC2008, the detailed output of each submitted method will be published online, e.g. per-image confidences for the classification task and bounding boxes for the detection task. The intention is to assist others in the community in carrying out detailed analysis and comparison with their own methods. The published results will not be anonymous: by submitting results, participants agree to have their results shared online.

Detailed results of all submitted methods are now online. For summarized results and information about some of the best-performing methods, please see the workshop presentations:

If you make use of the VOC2008 data, please cite the following reference (to be prepared after the challenge workshop) in any publications:

```bibtex
@misc{pascal-voc-2008,
  author = "Everingham, M. and Van~Gool, L. and Williams, C. K. I. and Winn, J. and Zisserman, A.",
  title = "The {PASCAL} {V}isual {O}bject {C}lasses {C}hallenge 2008 {(VOC2008)} {R}esults",
  howpublished = "http://www.pascal-network.org/challenges/VOC/voc2008/workshop/index.html"}
```

The VOC2008 data includes images obtained from the "flickr" website. Use of these images must respect the corresponding terms of use:

For the purposes of the challenge, the identity of the images in the database, e.g. source and name of owner, has been obscured. Details of the contributor of each image can be found in the annotation to be included in the final release of the data, after completion of the challenge. Any queries about the use or ownership of the data should be addressed to the organizers.

We gratefully acknowledge the following, who spent many long hours providing annotation for the VOC2008 database: Jan-Hendrik Becker, Patrick Buehler, Kian Ming Chai, Miha Drenik, Chris Engels, Jan Van Gemert, Hedi Harzallah, Nicolas Heess, Zdenek Kalal, Lubor Ladicky, Marcin Marszalek, Alastair Moore, Maria-Elena Nilsback, Paul Sturgess, David Tingdahl, Hirofumi Uemura, Martin Vogt.

The preparation and running of this challenge is supported by the EU-funded PASCAL Network of Excellence on Pattern Analysis, Statistical Modelling and Computational Learning.